This essay was originally published on Substack and is archived here as part of my ongoing work on political sentiment analysis.

Where watchdogs think AI is today — where the science shows it’s going — and why the Right cannot sit this out.

Dec 16, 2025

Last week I wrote about two landmark academic findings:

- A Nature study showing that AI-driven conversations can persuade voters more effectively than traditional ads

- A Science paper explaining how AI persuasion actually works, including the trade-offs between persuasiveness and accuracy

Together, those papers establish a clear reality:

AI is no longer a marginal campaign tool.

AI is becoming a primary persuasion medium.

Now comes the next phase of the story.

A new report from the Center for Democracy & Technology (CDT) lays out how campaigns are currently using AI — and, more importantly, how watchdogs believe AI should be governed in elections.

This report matters.

Not because it’s wrong across the board.

But because it reveals a growing gap between where AI campaign policy thinking is anchored and where the science, and the field, are actually heading.

That gap is where conservatives must engage.

Where AI Was in 2024 — and Why CDT Starts There

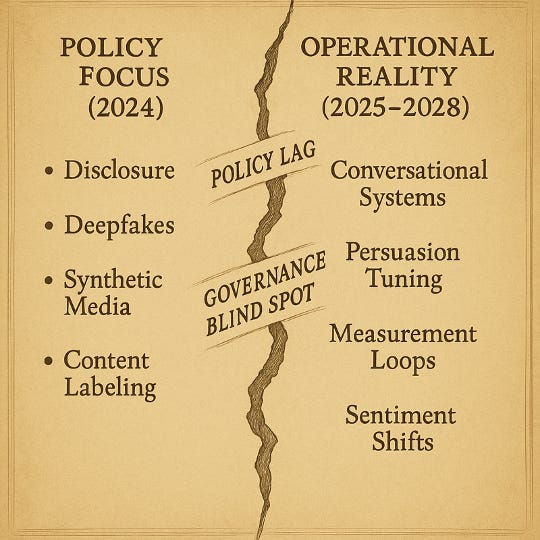

The CDT report largely reflects AI as it existed and was understood in 2024:

- Generative text tools used for drafting direct mail, emails, fundraising copy, and TV and radio ads

- Image and video tools raising fears about deepfakes

- Automation risks tied to misinformation and disclosure

- A general concern that “AI might influence voters in unpredictable ways”

From that starting point, CDT’s recommendations focus on:

- Transparency and disclosure

- Limits on deceptive synthetic media

- Guardrails against misinformation

- Ethical norms for campaign use

On these points, CDT aligns with the science more than critics might admit.

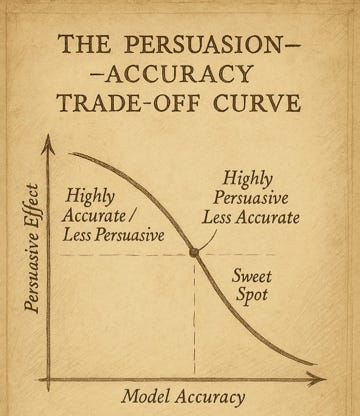

The Science paper explicitly warns that more persuasive models tend to become less accurate.

The Nature paper acknowledges risks around scale and misuse.

The concern that persuasion power can outpace truth safeguards is legitimate.

So let’s be clear:

CDT is right to be concerned about scale, opacity, and abuse.

But that’s only half the picture.

Where CDT Conflicts with the Science

Where the CDT report falls short is not intent, but understanding.

The report consistently treats “AI” as a single category:

- AI-generated content

- AI tools

- AI automation

- AI persuasion

From a policy perspective, this is convenient.

From a scientific and strategic perspective, it is deeply flawed.

The science makes something very clear:

Not all AI persuades, and persuasion does not come from “AI” generically.

The Science paper shows that:

- Personalization does not meaningfully increase persuasion

- Model size does not determine influence

- Emotional tone is not the driver

Instead, persuasion comes from:

- Structured reasoning

- Information density

- Post-training tuning

- Conversational feedback loops

In other words:

Persuasion is a mechanical process, not a side effect of content generation.

The CDT report does not engage with this at all.

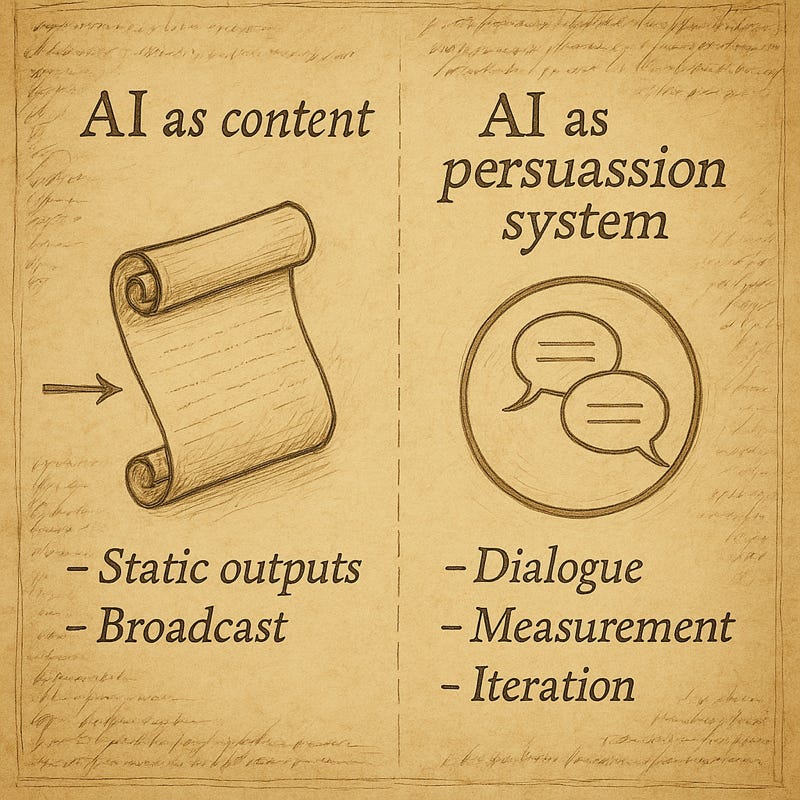

It warns about AI as a tool, but it does not distinguish between:

- Broadcast-style AI content

- Synthetic media

- Automated spam

- Conversational persuasion systems

That distinction matters — because governing all AI the same way will fail.

Why This Distinction Matters for Conservatives

Conservatives have seen this movie before.

In the early days of data analytics and digital campaigning:

- Democrats invested deeply in data and experimentation

- Republicans hesitated, either skeptical of the tools and the culture behind them or held back by those who defaulted to the older tools they already understood

- By the time the Right engaged seriously, the rules, norms, and platforms were already shaped

The same dynamic is emerging with AI.

Progressive NGOs, academic institutions, and tech companies are:

- Writing the first policy frameworks

- Framing the risk narrative

- Defining what is “acceptable” AI use

- Setting disclosure and enforcement standards

If conservatives stay quiet now, the result is predictable:

Rules will be written by people who do not understand persuasion mechanics — and do not share conservative assumptions about speech, agency, or voter autonomy.

Where the Science, and the Field, Are Actually Going

Here’s the reality CDT has not yet caught up to:

AI persuasion is moving away from content generation and toward measurable conversational systems.

In large-scale deployments we’ve been involved in over the past several months, we’ve seen:

- Hundreds of thousands of real conversations per week

- Clear identification of persuadables

- Measurable movement during the conversation itself

- Structured reasoning outperforming emotional appeals

- Feedback loops that allow continuous adjustment

This aligns precisely with the Science paper’s conclusion:

Persuasion is driven by post-training tuning and argument structure, not by personalization or scale alone.

That means the future of AI in campaigns is not:

- Fake videos

- Mass-produced talking points

- Automated misinformation

It is:

- Dialogue

- Measurement

- Iteration

- Accountability

But none of that shows up clearly in the CDT report because it’s still anchored to yesterday’s version of AI.
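To make "measurable movement during the conversation itself" concrete, here is a minimal sketch of one way such movement could be computed. It is an illustration under stated assumptions, not a description of how any particular platform works: the `Conversation` record, the 0-100 support scale, and the 35-65 "persuadable" band are all hypothetical choices made for the example.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Conversation:
    # Hypothetical fields: stated support on a 0-100 scale,
    # asked once before and once after the conversation.
    pre_score: float
    post_score: float


def movement(conv: Conversation) -> float:
    """Change in stated support over one conversation (post minus pre)."""
    return conv.post_score - conv.pre_score


def is_persuadable(conv: Conversation, low: float = 35.0, high: float = 65.0) -> bool:
    """Illustrative rule only: the person starts in the middle of the scale."""
    return low <= conv.pre_score <= high


def summarize(convs: list[Conversation]) -> dict[str, float]:
    """Aggregate movement across conversations, overall and for persuadables."""
    if not convs:
        raise ValueError("need at least one conversation")
    persuadable = [c for c in convs if is_persuadable(c)]
    return {
        "n": len(convs),
        "mean_movement": mean(movement(c) for c in convs),
        "persuadable_share": len(persuadable) / len(convs),
        # Report 0.0 when nobody falls inside the assumed persuadable band.
        "mean_movement_persuadable": (
            mean(movement(c) for c in persuadable) if persuadable else 0.0
        ),
    }


# Made-up sample data, for illustration only.
sample = [
    Conversation(50.0, 58.0),
    Conversation(20.0, 20.0),
    Conversation(60.0, 56.0),
    Conversation(90.0, 92.0),
]
report = summarize(sample)
```

A before-and-after difference like this shows movement, but on its own it does not show that the conversation caused it. A credible measurement program would also compare against a control group that did not have the conversation, which is the kind of detail a governance framework could reasonably ask campaigns to document.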

Why EyesOver (and Platforms Like It) Matter in This Debate

This is where conservatives can, and should, lead.

EyesOver was built around the assumption that:

- Persuasion must be measurable

- Movement matters more than impressions

- Conversations matter more than broadcasts

- AI must be auditable, testable, and iterative

SparkFire, as an engagement layer, demonstrated early what the academic literature is now confirming:

that structured, conversational persuasion works and can be measured responsibly.

This doesn’t mean every AI system should be allowed.

It means policymakers must distinguish between:

- Reckless automation

- And structured persuasion systems with controls, metrics, and guardrails

Blanket fear-based regulation will miss this entirely.

The Strategic Moment We’re In

The CDT report is a signal.

It tells us:

- The policy world is paying attention

- Norms are being drafted

- The conversation is moving fast

But it also tells us:

- The people shaping those norms do not understand how persuasion actually works

- The science is ahead of the policy (as is often the case)

- The field, right now, is ahead of both

This is the window where conservatives must engage — not to block regulation, but to shape it intelligently.

The Bottom Line

In 2024, AI in campaigns meant:

- Drafting copy

- Generating images

- Speeding up workflows

In 2026 and beyond, AI in campaigns means:

- Persuasion as a system

- Conversation as the unit of influence

- Measurement as the guardrail

The Nature paper showed AI persuasion is real.

The Science paper explained how it works.

The CDT report shows the governance fight has begun.

If conservatives do not participate now — thoughtfully, technically, and credibly — the rules will be written without us.

And by the time everyone realizes what AI persuasion has become, it will be too late to influence how it is governed.
